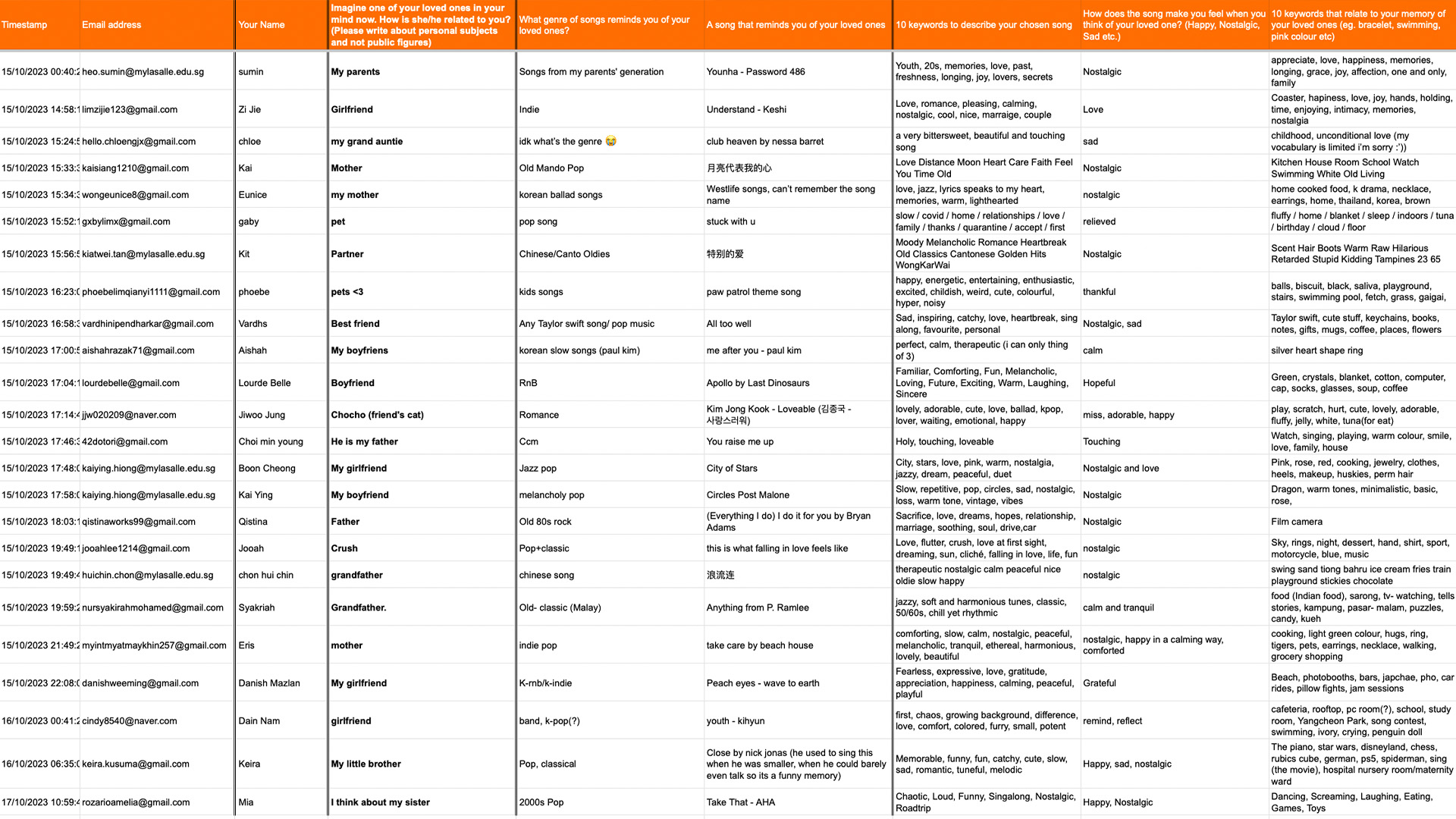

Findings

In our daily lives, we tend to remember faces and auditory cues more than the belongings that our loved ones carry. Auditory elements like their speech patterns and frequently played songs serve as important triggers that help us recall them. We found that music has a profound ability to evoke emotions, trigger memories, and connect us with our loved ones. Certain songs become linked with specific events or people in our lives, and hearing them triggers memories of those moments or individuals. Music also has the power to convey complex emotions.

Hearing a familiar song can instantly evoke feelings of joy, sadness, love, or nostalgia, especially when it reminds us of someone we love dearly. Music-evoked nostalgia has been linked to solace: memories of significant people or happy times bring calm and comfort, creating a sense of safety and acceptance.

Music plays a role in memory formation, which is why we often associate certain songs with someone we love. Listening to music can evoke memories of moments spent with that special person, providing a means to revisit their presence, especially when physical distance or other circumstances have kept us apart.

Music can also convey unspoken emotions that are difficult to put into words. Loved ones might dedicate songs to express love, apology, gratitude, or other sentiments, creating a lasting memory tied to the music. Music can also be a source of consolation in times of grief. Listening to songs associated with a departed loved one can provide comfort, allowing individuals to feel connected to the person they have lost.

Join us on our journey as we walk you through our thought process: the insights we gained, our design decisions, the challenges we faced, the feedback we received, and our achievements.

Insights we gained

One of us, Darum, is an international student from South Korea who came to Singapore alone to pursue her studies. Her family members, including her 2 cats, are back home in Korea. This made us wonder whether other international students like her miss their loved ones back in their hometowns. Following this idea, we wanted to see if it is possible to generate more songs that are similar to the one song that reminds people of their feelings towards their loved ones.

We were also interested in exploring how one song and a few keywords can intertwine with memories of the people someone holds dear. To do this, we created a survey with questions that allowed us to collect keywords to feed the AI. We decided to push AI to generate a music playlist and visuals from the single recommended song and some human emotions. Using generative aesthetics, we then developed the visuals further to match the sound waves.

Design decisions

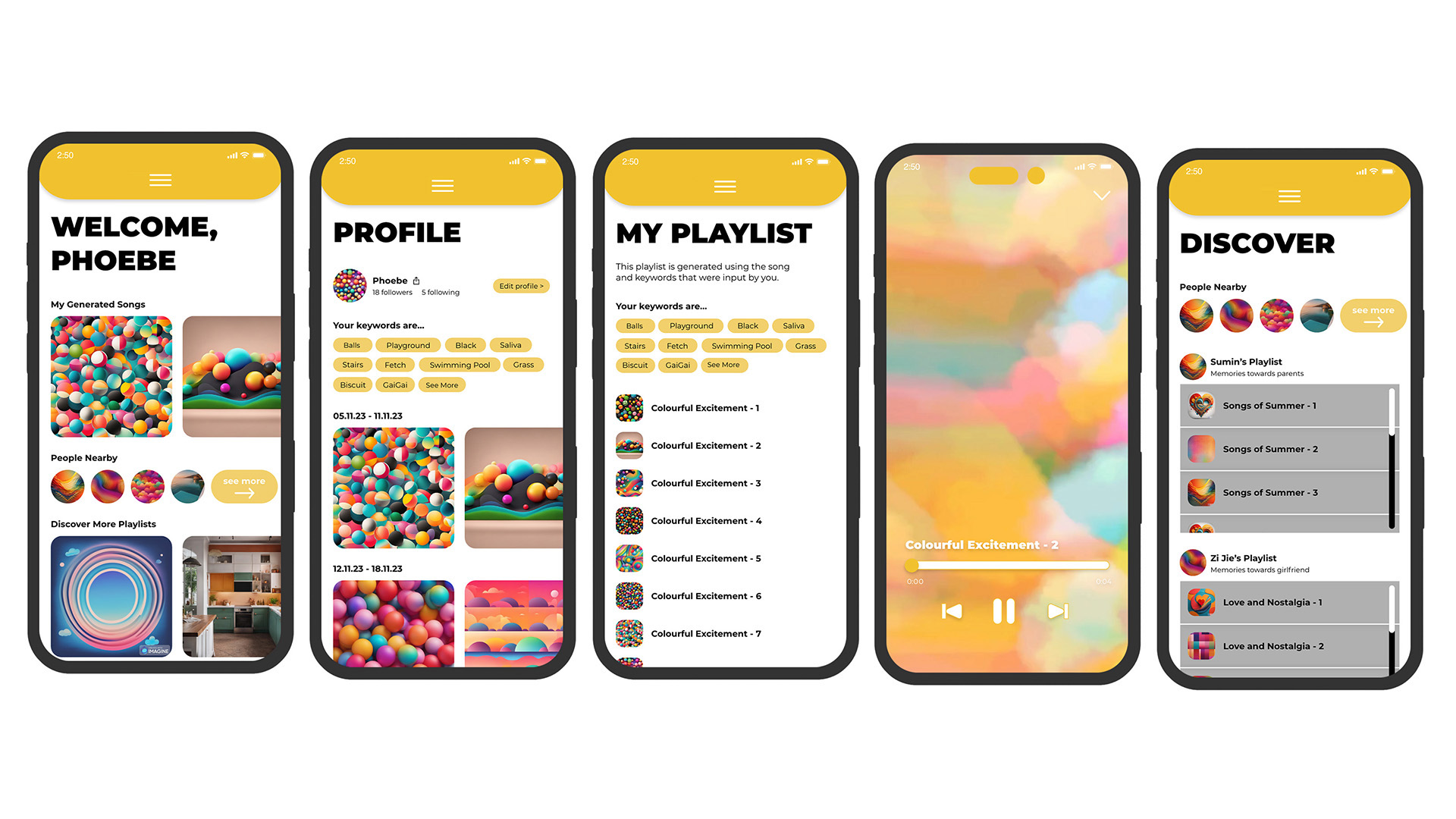

We decided that our final outcome would be an app, because an app is accessible anytime on the go and is convenient for users whenever they miss their loved ones.

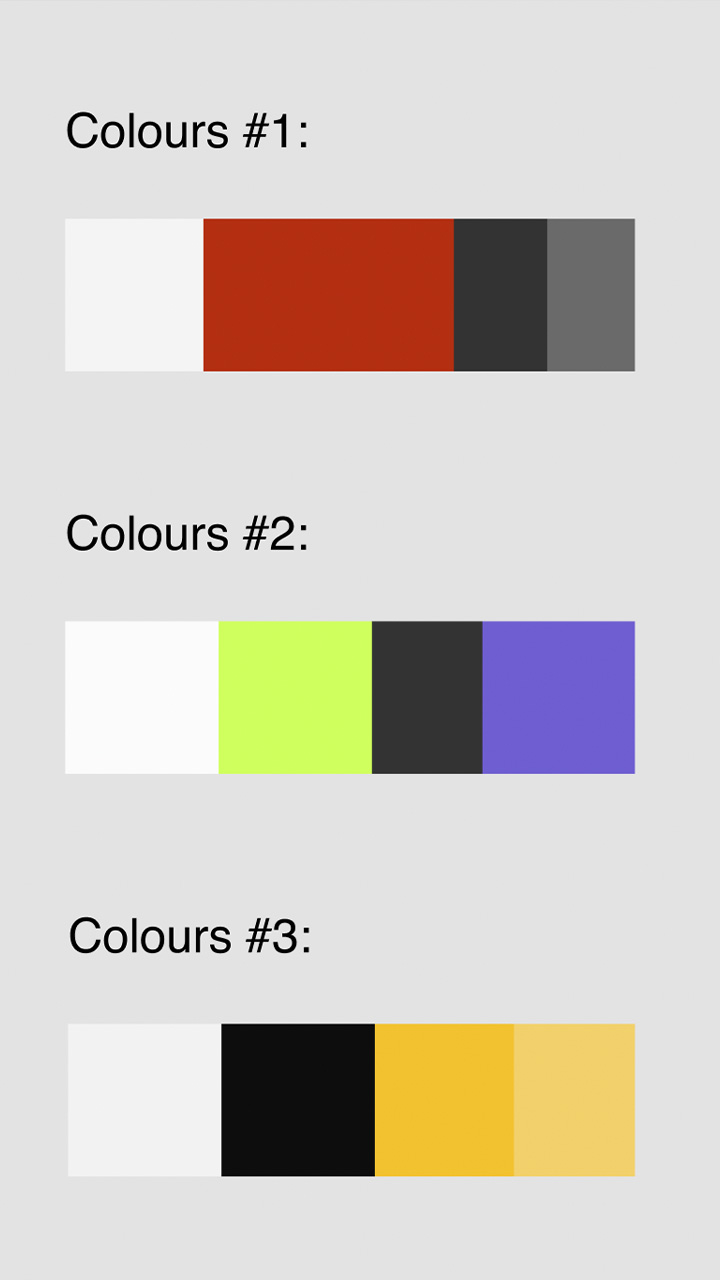

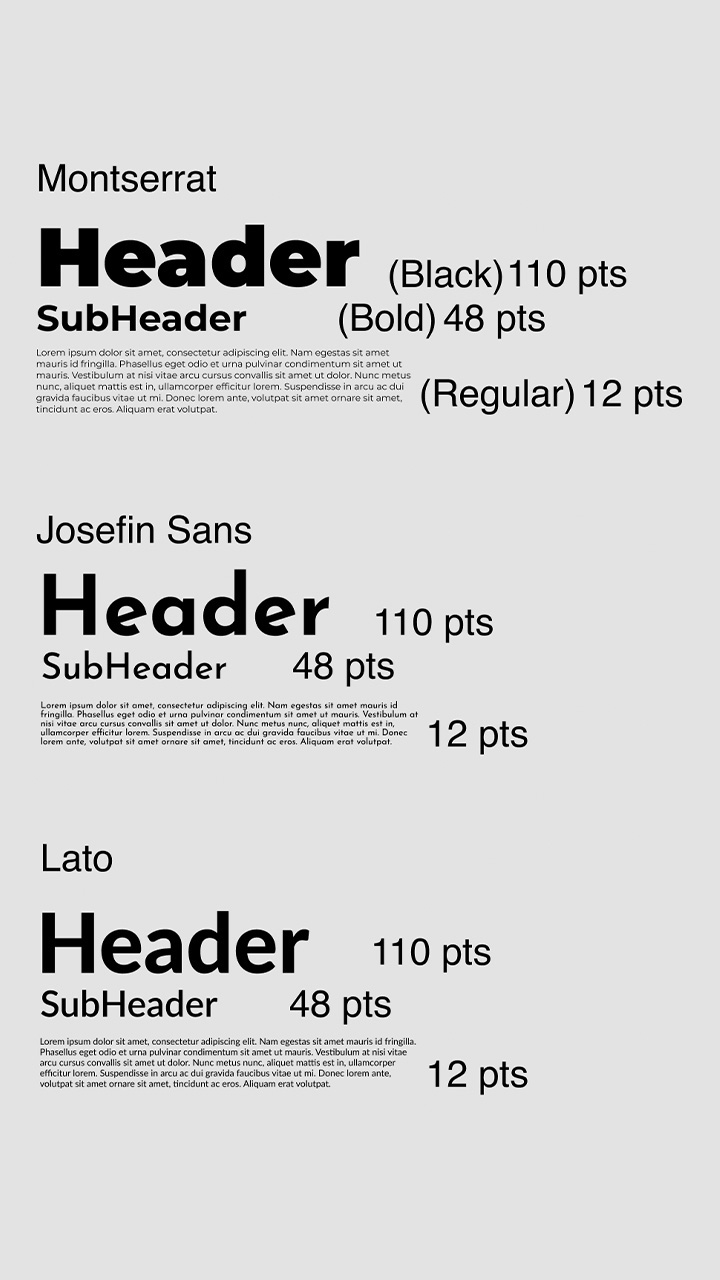

For the app's colours, we chose a palette built mainly on yellow and black from among various options, because it gave a soft and warm feeling without being too heavy. For the font, we settled on Montserrat from a pool of candidates.

For the overall app design, we set it up so that users can enter 'my profile' and 'my playlist', and we also encouraged them to share and explore other people's playlists through 'Discover'. This allows users to share their feelings about their loved ones.

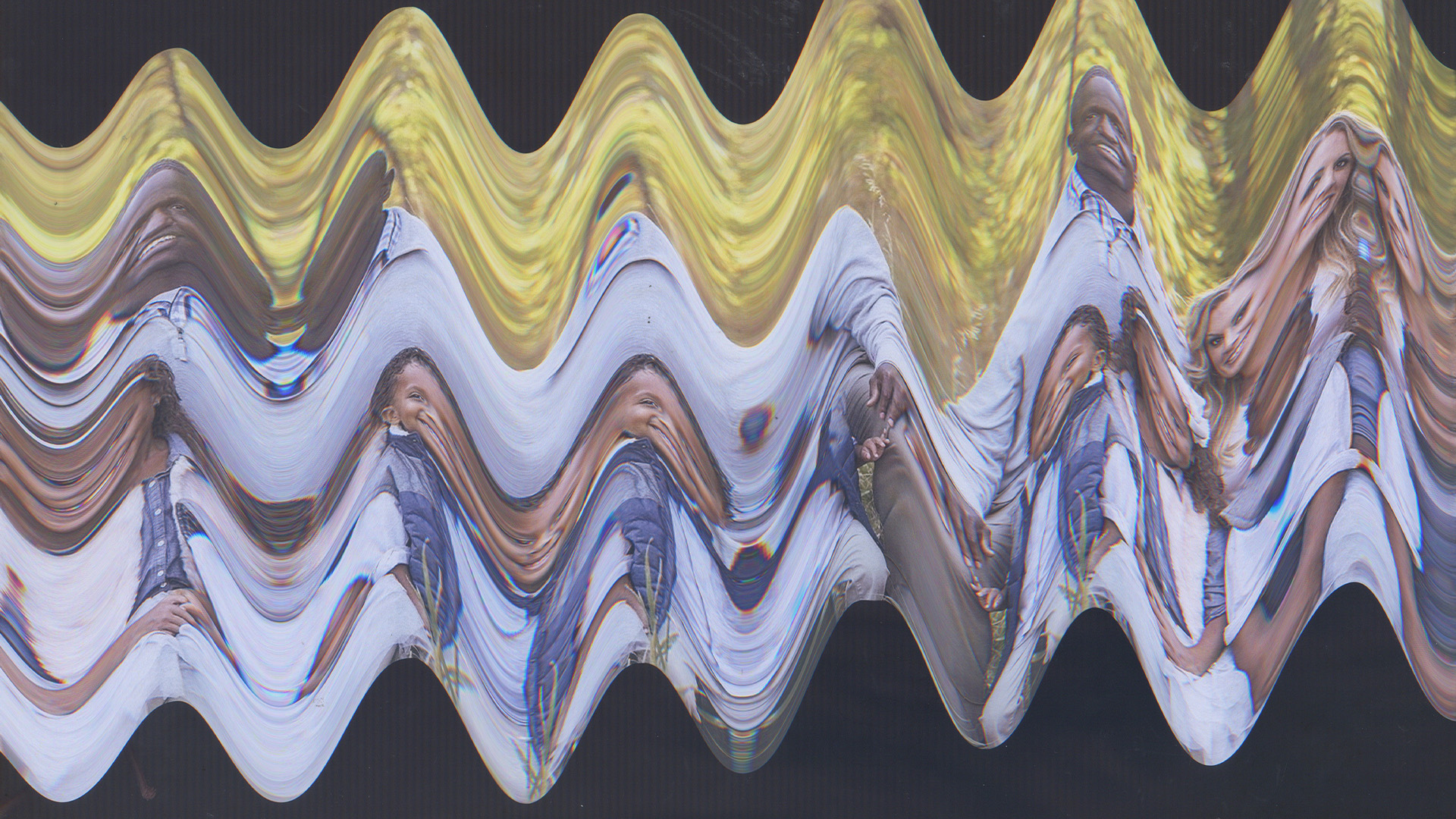

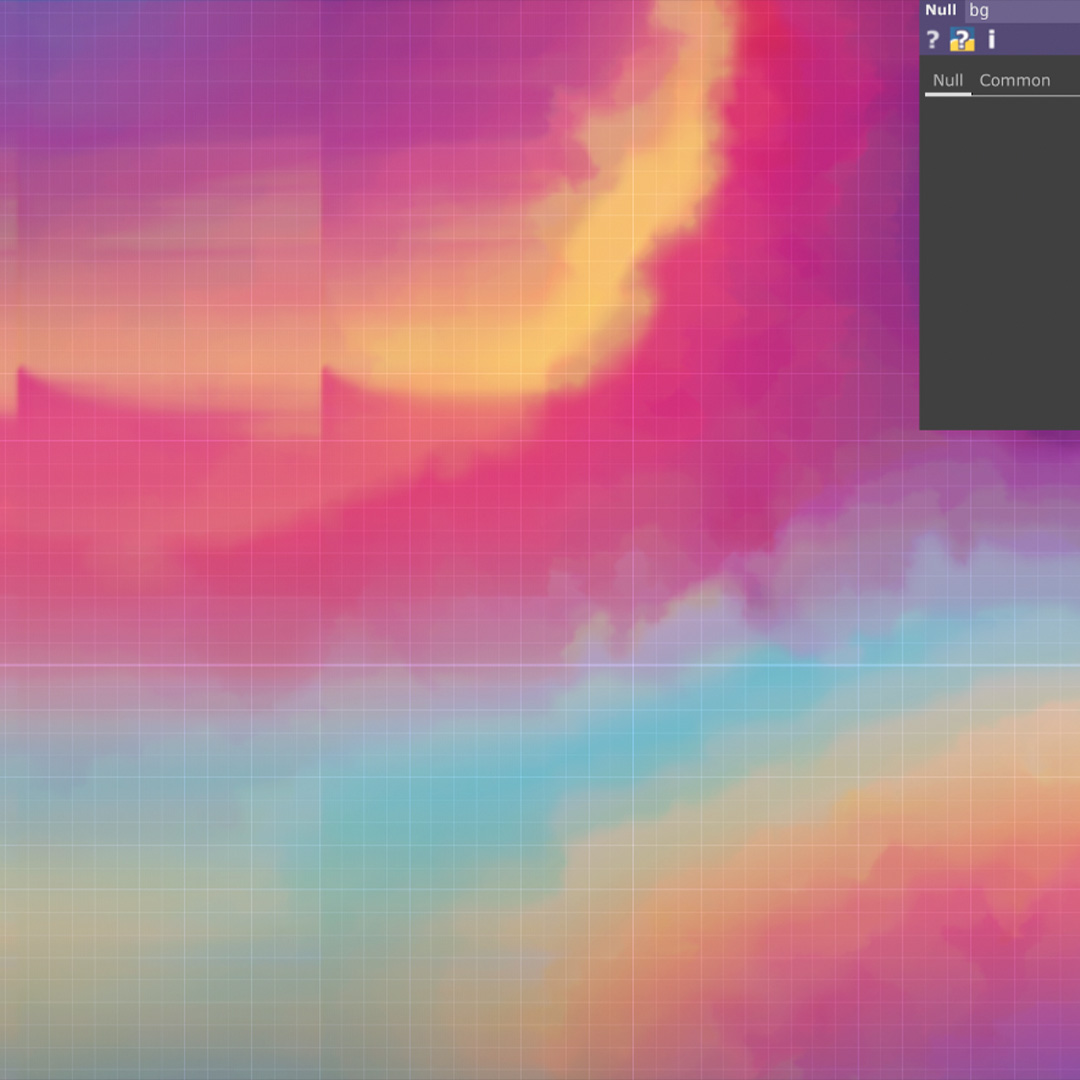

The integrated outcome was a video combining watercolour and slit-scan techniques synchronised with audio. Crafted in TouchDesigner, the video draws its emotional impact from its connection to a loved one, and the watercolour visuals add an expressive mood.

Challenges

Pre-project Challenges

Our group was almost done with our project proposal when some people told us that they felt uncomfortable with our data collection, because they felt that their loved ones' pictures were being played with in a project. After I brought this up with my group mates, we decided to sit down and send an email to Andreas about the issue, showing him our current proposal and asking for feedback. We wanted to know whether he thought we should proceed with the project as it was or tweak some things to make it less sensitive for others.

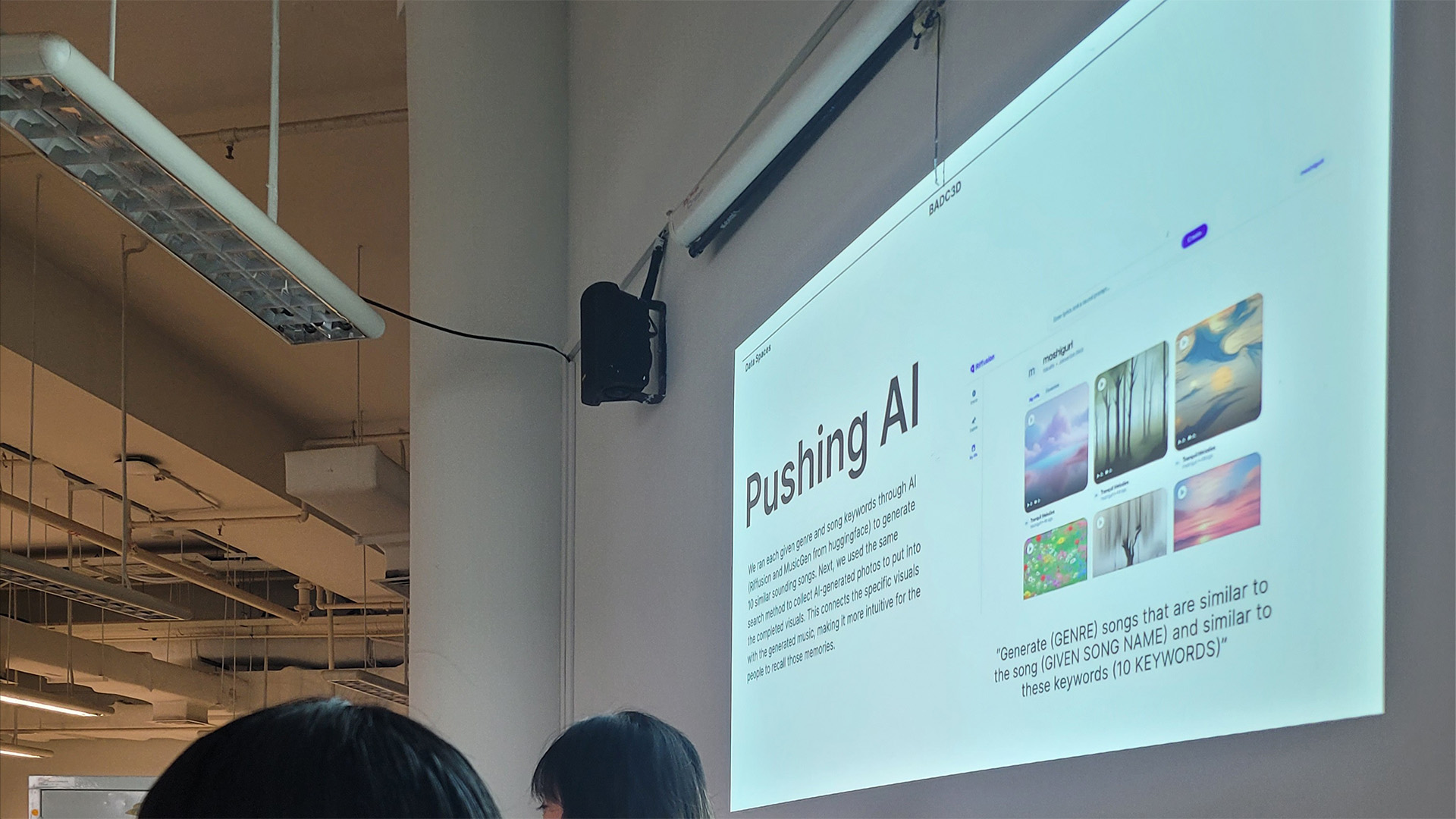

With that, we brought up some ideas about how we could push Artificial Intelligence. We thought of looking at how the people in the survey picked their songs and asking them what they do when they miss their loved ones. We would then ask AI to generate songs based on the keywords and the song that each person sent. Using these songs, we would then generate a chart of songs that the person might enjoy listening to as they think of their loved ones.

AI Challenges - song generation

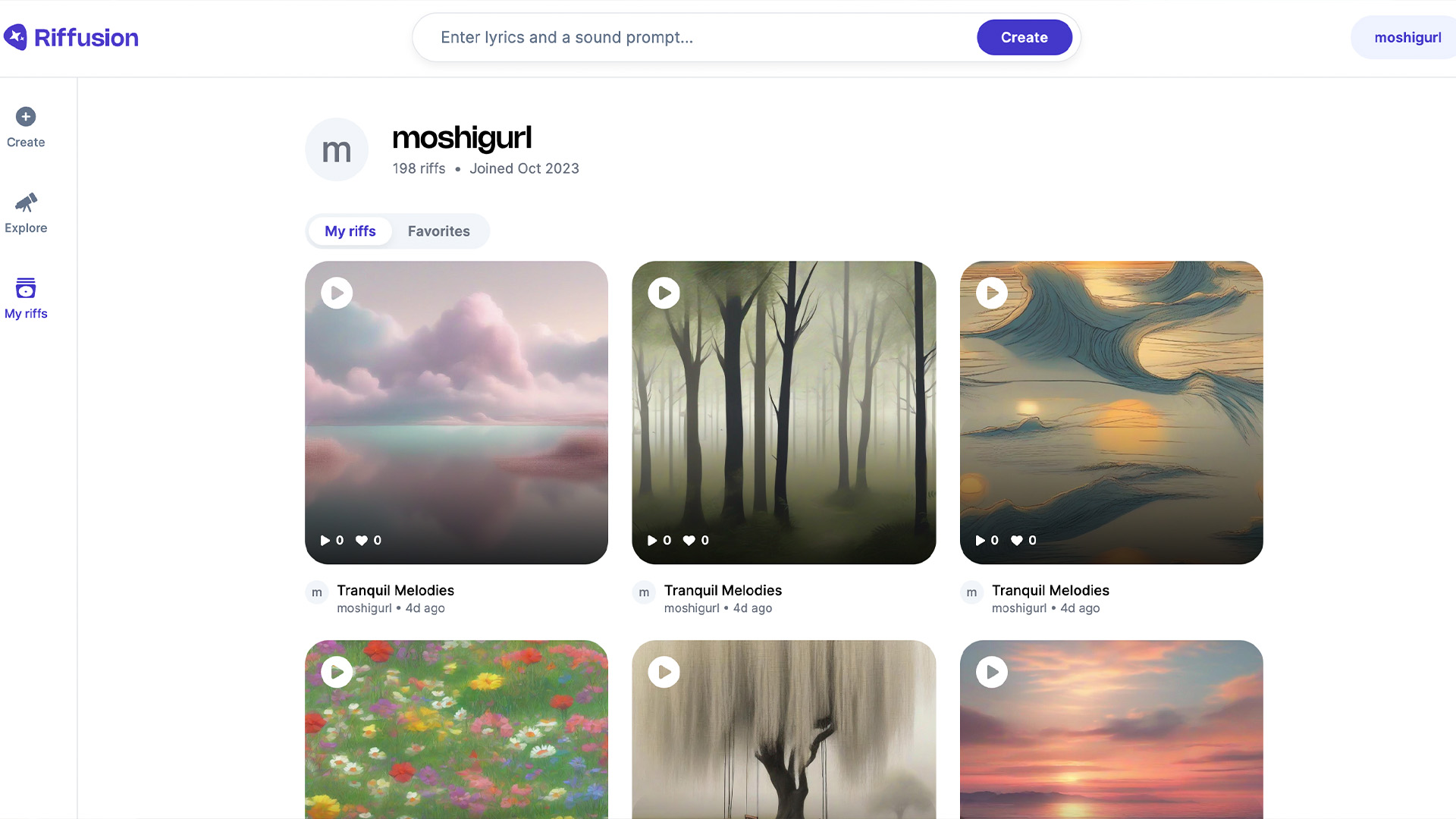

When we started generating the songs, we decided on 10 songs per person to keep the playlists fair. Initially, we came across a new AI made by Google, AI Test Kitchen, and wanted to try it out. However, after signing up, we were put on a waitlist with no indication of how long the wait would be. After a long search, I ended up using Riffusion and MusicGen from Hugging Face. Riffusion only allowed us to generate 12 seconds per song, while MusicGen allowed a maximum of 15 seconds per song. We prompted each AI in this format: “generate (GENRE) songs that are similar to the song (GIVEN SONG) and similar to these keywords (KEYWORDS)”
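
The prompt format above can be filled in automatically for each survey response. The following is only a minimal sketch in Python: the function name `build_music_prompt` and the example answers are hypothetical, and we actually typed our prompts into each tool by hand.

```python
def build_music_prompt(genre, given_song, keywords):
    """Fill the prompt template quoted above with one respondent's answers."""
    # Drop empty keywords and join the rest into a comma-separated list.
    keyword_text = ", ".join(k.strip() for k in keywords if k.strip())
    return (
        f"generate {genre} songs that are similar to the song {given_song} "
        f"and similar to these keywords {keyword_text}"
    )


# Hypothetical survey answer, for illustration only.
example_prompt = build_music_prompt("pop", "Example Song", ["warm", "home", "longing"])
print(example_prompt)
```

Keeping the template in one place means every respondent's prompt has the same wording, so differences between the generated songs come from the song and keywords rather than from how the request was phrased.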

AI Challenges - image generation

Before creating the images, we discussed the exact direction we should take. We had initially suggested creating abstract images, but we had not discussed the specific level of abstraction. So we made slight variations in the prompts and generated images from each. The first prompt asked for an abstract and symbolic image, the second for a gradient background, and the third for a gradient image with colour codes symbolising the keywords.

The first sites we used were IMAGINE and Canva. We agreed that the images created using these sites felt more literal than abstract. Therefore, we switched our main AI image generator to Wepik, and decided to generate three or more images each time and choose the best one.

JS Challenges

Before settling on TouchDesigner as our final tool, our top priority was to experiment with distorting images using JavaScript. So we uploaded dummy images and music to p5.js, and wrote a simple sketch that applied a stronger blur effect to the image as the volume of the music increased.

This worked, but we wanted the code to run independently of the p5.js editor. We therefore tried to recreate it as standalone JavaScript and HTML files in Visual Studio Code, based on the code we had written previously, but it did not function correctly. Unable to find any hints in the existing code or identify the bugs, we decided to delve into learning TouchDesigner.
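
The idea behind that experiment, louder music giving a stronger blur, can be sketched roughly as follows. This is not our p5.js code: it is a small Python illustration, and the function names and the maximum radius of 12 are assumptions chosen for the example.

```python
import math


def rms_level(samples):
    """Root-mean-square amplitude of one block of samples in [-1.0, 1.0]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def blur_radius(level, max_radius=12.0):
    """Map a level in [0, 1] linearly to a blur radius, clamping out-of-range input."""
    clamped = min(max(level, 0.0), 1.0)
    return clamped * max_radius


# Hypothetical sample blocks: silence, a medium signal, and a full-scale signal.
quiet = [0.0, 0.0, 0.0, 0.0]
medium = [0.5, -0.5]
loud = [1.0, -1.0, 1.0, -1.0]

for block in (quiet, medium, loud):
    print(blur_radius(rms_level(block)))
```

In a real sketch, the radius would be recomputed for every frame from the most recent block of audio and then passed to whatever blur filter the drawing tool provides.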

Moodboard Challenges

One of the biggest concerns raised about our project was that the look and sound might be very weird together, firstly because an AI was generating everything, and secondly because of the distortion effect we were trying to achieve with our visuals. An example of this is Phoebe, who mentioned in our survey form that she is reminded of her dogs when she listens to children's songs. The AI managed to generate the children's songs, but the generated artworks might not match them, especially once we had distorted the images.

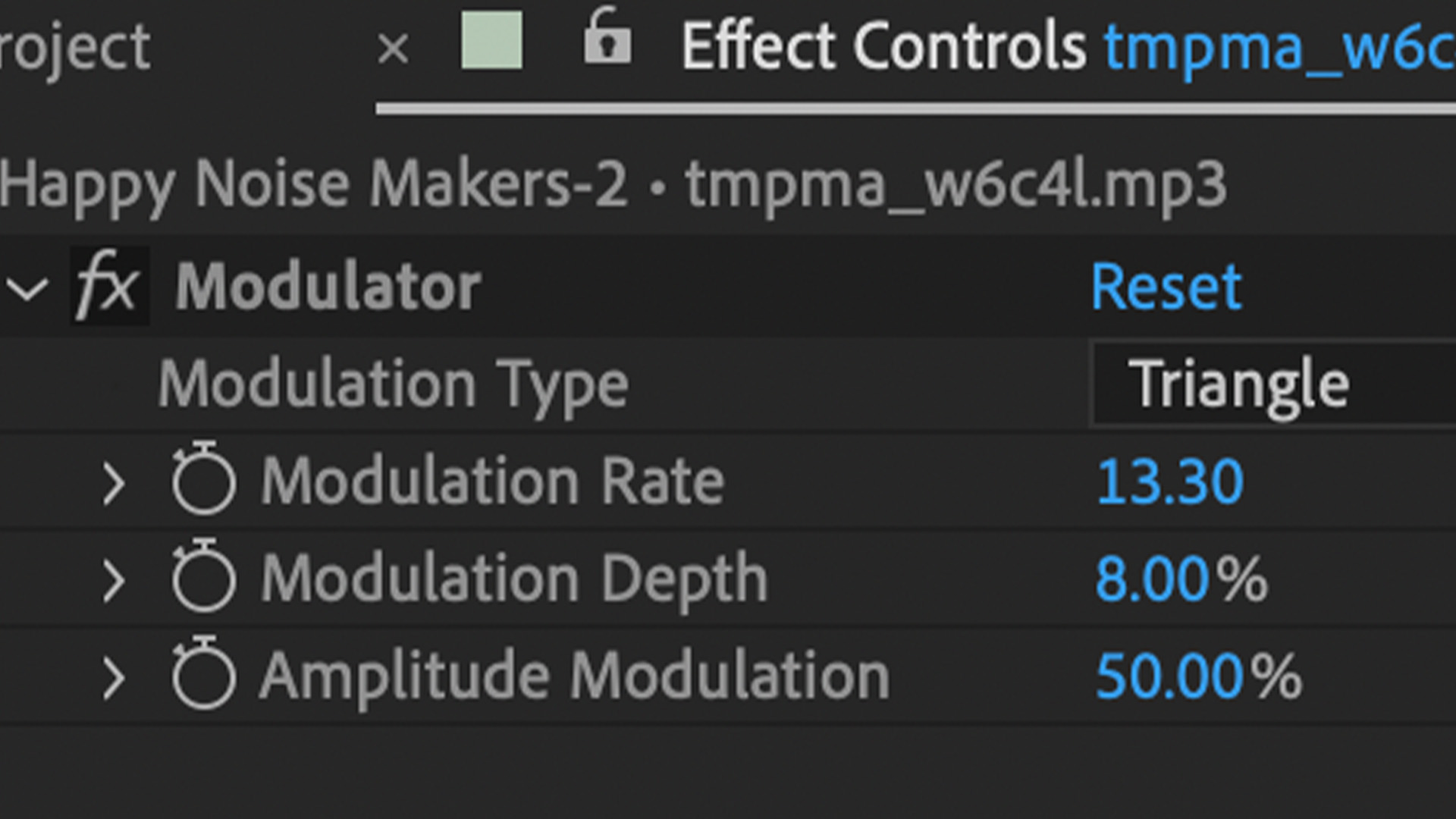

With this, we had to think about what we could do from here. We wondered if it was possible to distort the audio so that it matched the visuals too. However, the problem would then be that we were tampering with the person's memory of their loved ones, and that memory might not match our outcome. So we had to ask ourselves how we could make our final outcome look the way we wanted. We then tried distorting the songs and the images to see how far we could get.

In the end, we felt that distorting the songs the AI generated was also like distorting the memories of the people who submitted their data. Hence, we decided to try other distortion methods that would not ruin the images and would still match the music. This led to the current slit scan and watercolour effect in TouchDesigner.

TouchDesigner Challenges - ParticlesGPU

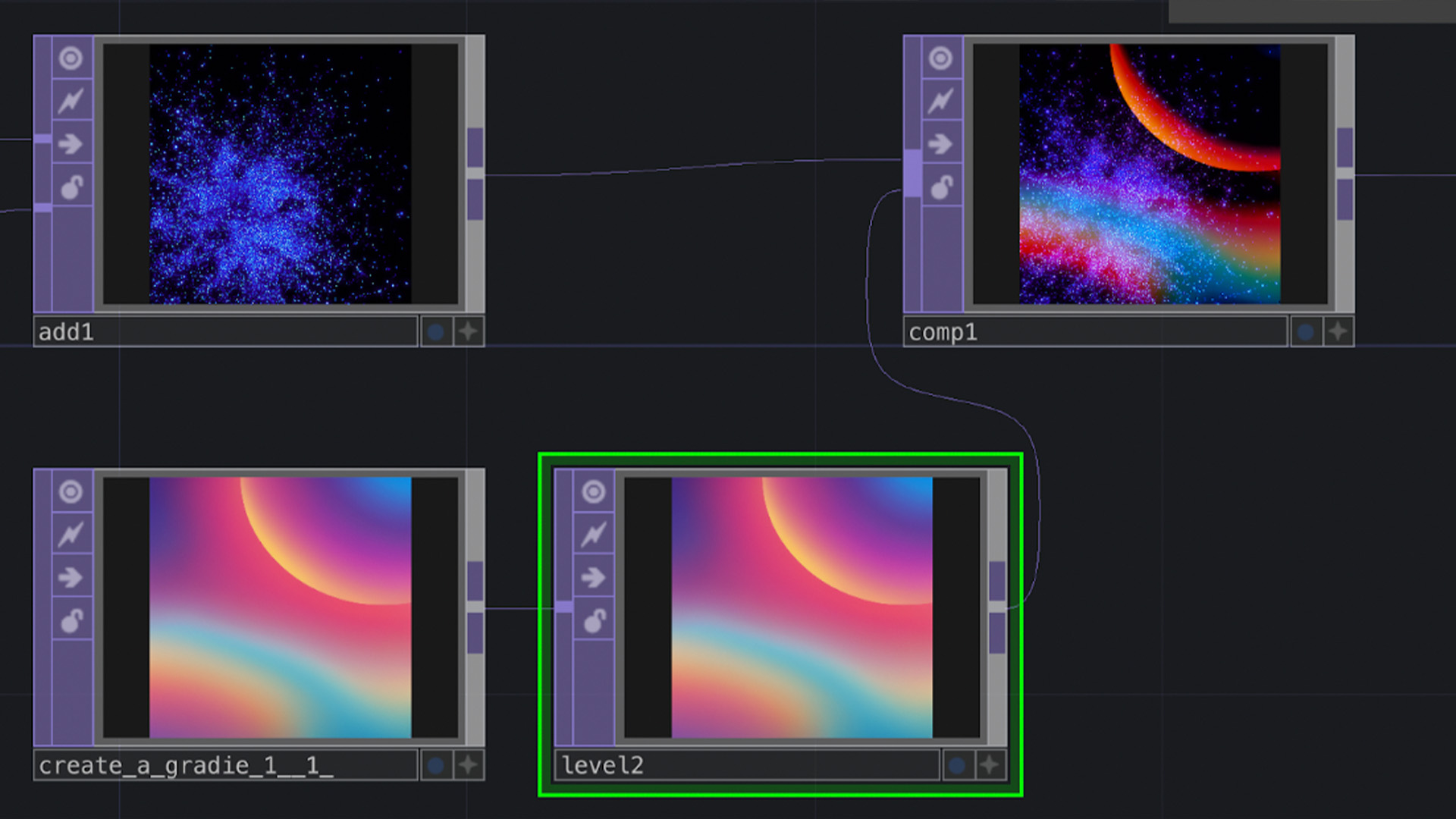

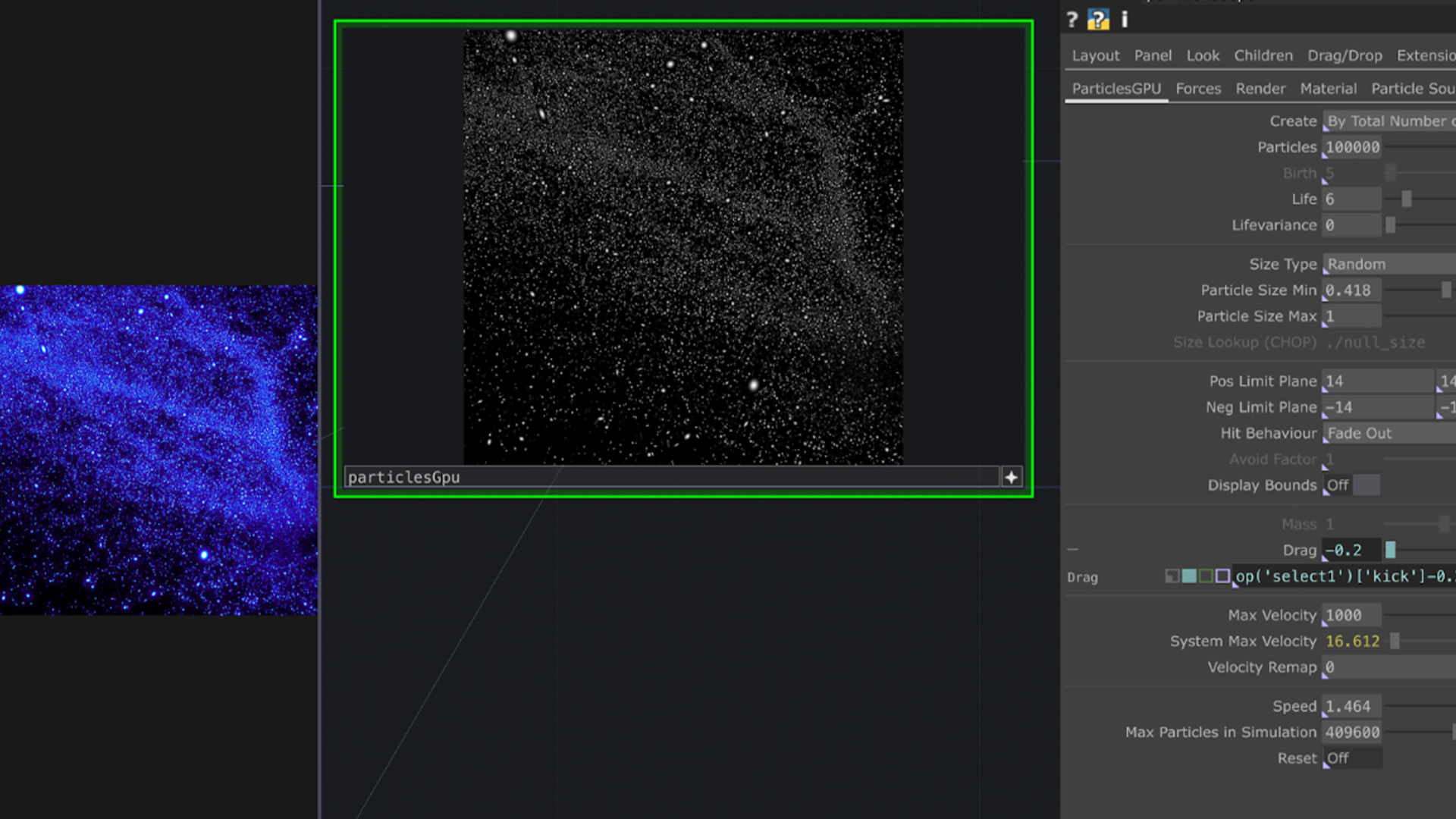

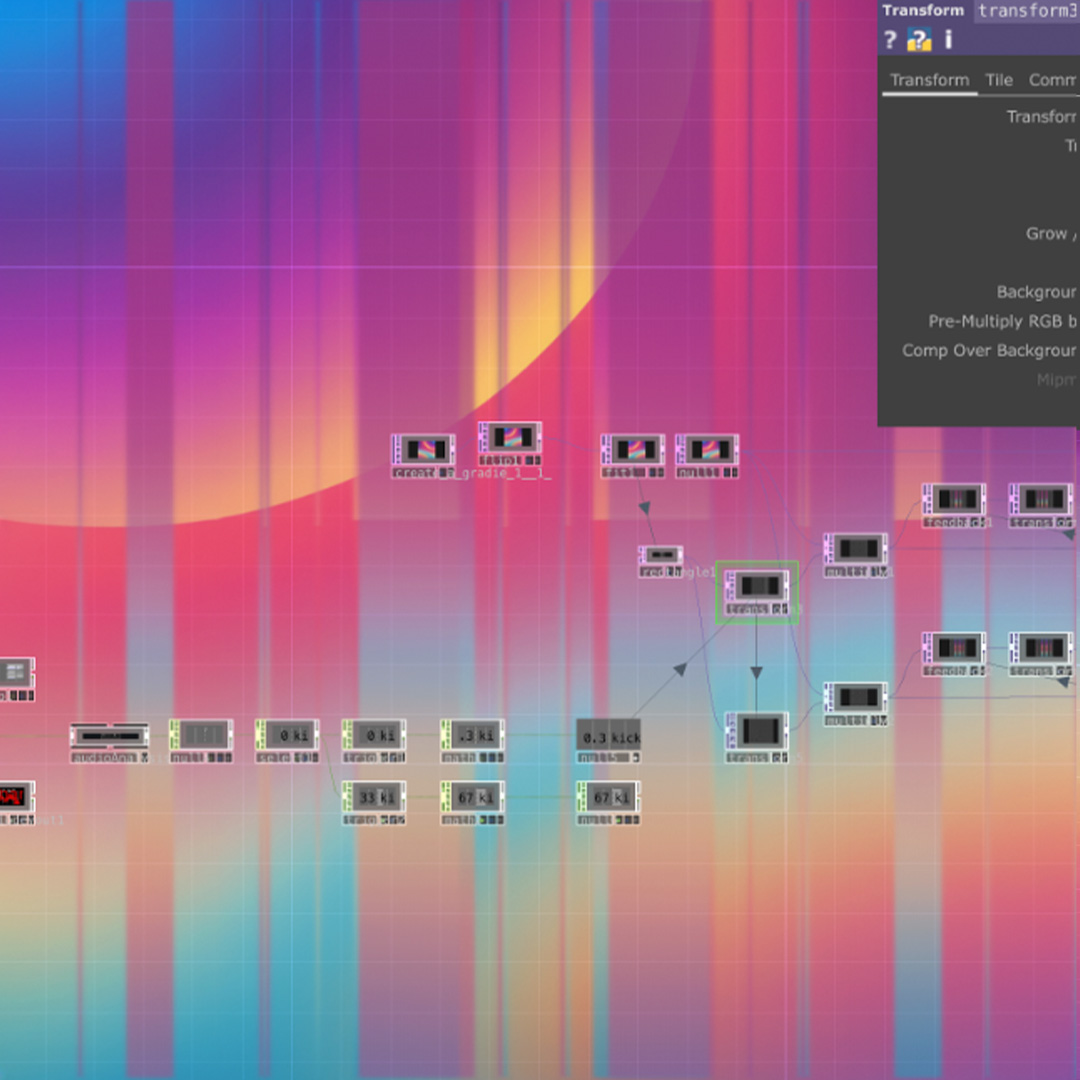

Having encountered the challenges mentioned above, we decided to delve into TouchDesigner. The fundamental framework involved creating moving particles using 'particlesGPU' and connecting the particles to the audio through 'audioAnalysis'.

First, we combined ‘audioAnalysis’ with ‘particlesGPU’. We set it up to respond to several elements of the audio, such as the snare and kick, adjusted the values through ‘math’, and fed them into the ‘drag’ or ‘velocity’ parameters of particlesGPU. This connected the particles to the audio, and we then applied colours and images using composite.

However, the outcome we were aiming for with particlesGPU was achievable in the 2020 version, but the 2022 version we were using had no 'sand preset'. Consequently, the results differed from our expectations. At the same time, we all felt that this outcome was far from our original intention of 'evoking memories through music and abstract images'. The particle effects were just a ‘popular’ form of audio-reactive visual and did not provide a unique and meaningful outcome.

TouchDesigner Challenges - Watercolour and Slit Scan

In light of this, we decided to pivot to the slit scan we had initially planned and combine it with a watercolour effect, which effectively communicates abstract images of yearning and memory. Combining these two processes allowed us to craft a unique result.

We created the watercolour outcome first. After using noise effects, composite, and blur to make the image bleed like watercolour, we copied over the existing audio analysis. We connected it to the feedback pulse and set it up so that the image responds to the kick's values. Because the watercolour outcome was a video, it responded better to the slit scan. Using the composite's over mode, small rectangles were placed on the photo and made to flow. The combined file completed in this way was saved in mp4 format.
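
For readers unfamiliar with slit scan, the general idea is that different rows of the output are taken from different moments in the video, which makes moving content stretch and flow. The sketch below is a generic Python illustration of that technique, not the TouchDesigner network we built; the function name, the `delay_per_row` parameter, and the tiny test frames are all assumptions made for the example.

```python
def slit_scan_frame(frames, t, delay_per_row=1):
    """Build one output frame in which row y is taken from frame t - y * delay_per_row.

    `frames` is a list of frames, each a list of rows of pixel values.
    Source indices outside the clip are clamped to its first or last frame.
    """
    height = len(frames[0])
    last = len(frames) - 1
    output = []
    for y in range(height):
        source_index = min(max(t - y * delay_per_row, 0), last)
        output.append(list(frames[source_index][y]))
    return output


# Three tiny frames of 3 rows x 2 pixels; every pixel stores its frame number.
frames = [[[n, n] for _ in range(3)] for n in range(3)]
result = slit_scan_frame(frames, t=2)
print(result)  # [[2, 2], [1, 1], [0, 0]]
```

The top row shows the newest frame and each row below it lags further behind, so a still image stays unchanged while anything that moves, such as a pulsing watercolour texture, is smeared across time.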

“The greater the obstacle, the more glory in overcoming it.”

― Molière

Feedback

Halfway into the project, we received two main pieces of feedback that affected our work. Firstly, some people told us that they did not feel comfortable sharing their loved ones' pictures, as they felt that it trivialised their loved ones, especially if the images were going to be distorted.

Secondly, Andreas told us that many songs are for personal use only and cannot be uploaded unless the clip is shorter than 5 seconds. However, Juwai looked it up and found that not even 1 second can be reuploaded online. We took both pieces of feedback into consideration and changed our project to focus entirely on AI generation.

Achievements

We were able to translate the emotions in the keywords we collected into visuals and sounds through Generative AI. We turned these visuals and sounds into a practical and usable tool in the form of an app.

As we worked with users' emotions, we delved deeper into ways to communicate them more practically. Through this project, we were able to look at areas where current AI needs to be further developed, such as the uncanny valley. We also had time to explore practical design using programs such as TouchDesigner and Adobe XD.