Process

From ideation to the final outcome, our group went through thick and thin, changing ideas along the way to arrive at solutions that let everyone use our application, Melodic Memories, comfortably.

Join us on our journey as we show you our thought process, from Ideation, to Approaches, to Data Collection and finally to the Artefact.

The Ideation Stage

Music has a profound ability to evoke emotions, trigger memories, and connect us with our loved ones. Certain songs become linked with specific events or people in our lives, and hearing them triggers memories of those moments or individuals. Music also has the power to convey complex emotions: a familiar song can instantly evoke feelings of joy, sadness, love, or nostalgia, especially when it reminds us of someone we love dearly. Music-evoked nostalgia has been linked to solace and comfort, where memories of significant people or happy times bring calm and create a sense of safety and acceptance.

This made us interested in exploring how one song and a few keywords can intertwine with memories of the people someone holds dear. We decided to push AI to generate a music playlist and visuals from a single recommended song and human emotions, then use coding to develop the visuals further so that they match the sound waves.

Proposed objective

Our innovative generative art project aims to explore the heartfelt emotional connection between music and cherished memories of our loved ones. Through this project, we invite participants to share the precious songs that are intertwined with memories of the people they hold dear. With generative art, these melodies come to life visually, each with an interesting story to tell.

Music plays a role in memory formation, which is why we often associate certain songs with someone we love. Listening to music can evoke memories of moments spent with that special person, providing a means to revisit their presence, especially when physical distance, separation, or other circumstances have kept us apart.

This project aims to ease the longing of those who cannot physically touch their "beloved person" and to rekindle their love for them.

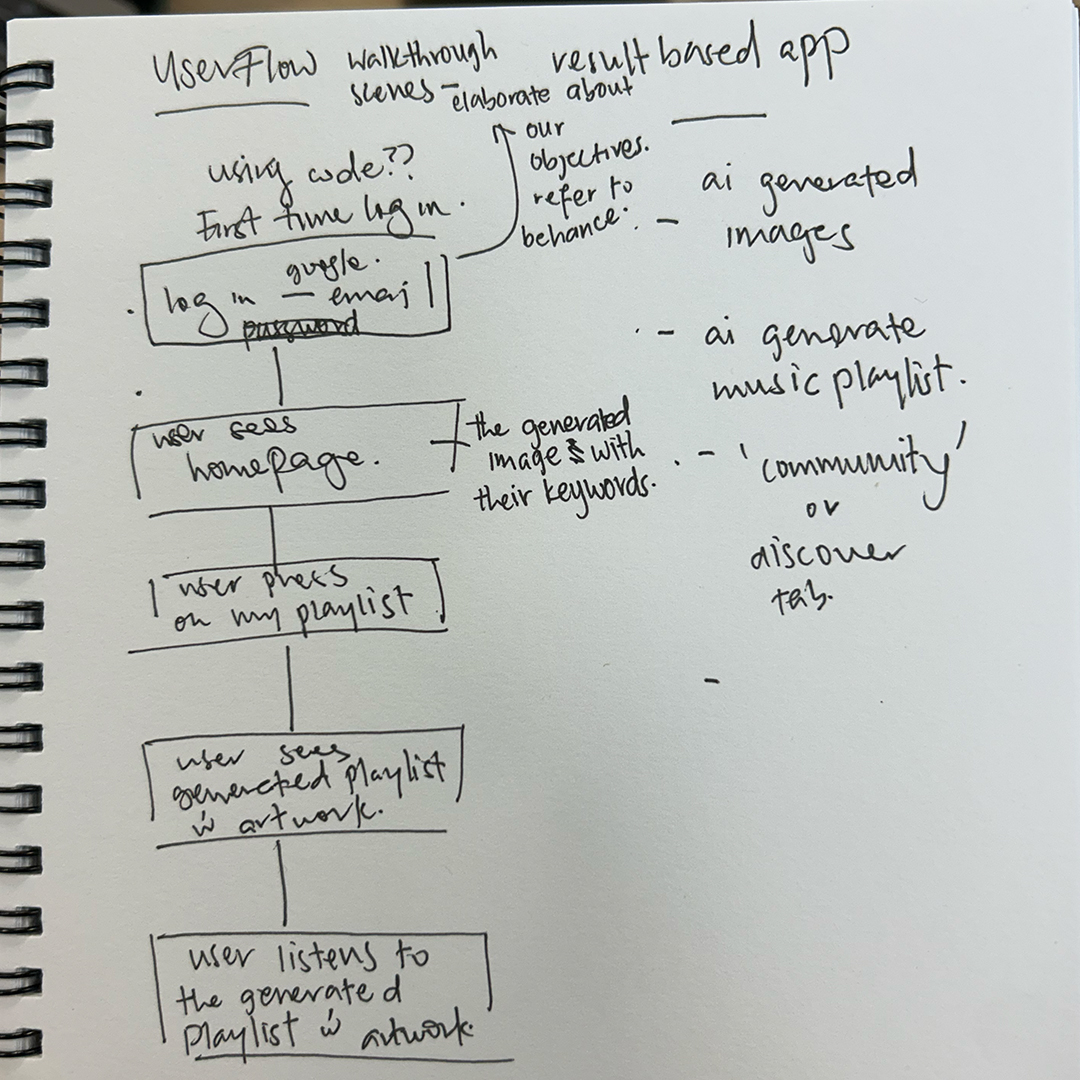

Approaches and Parameters

First, we collected data through a Google Form, which also recorded each respondent's email. We asked people for a song that evoked memories of their loved ones, the genre of that song, and 10 keywords that represent it. We also asked for another 10 keywords that describe their memory of their loved ones.

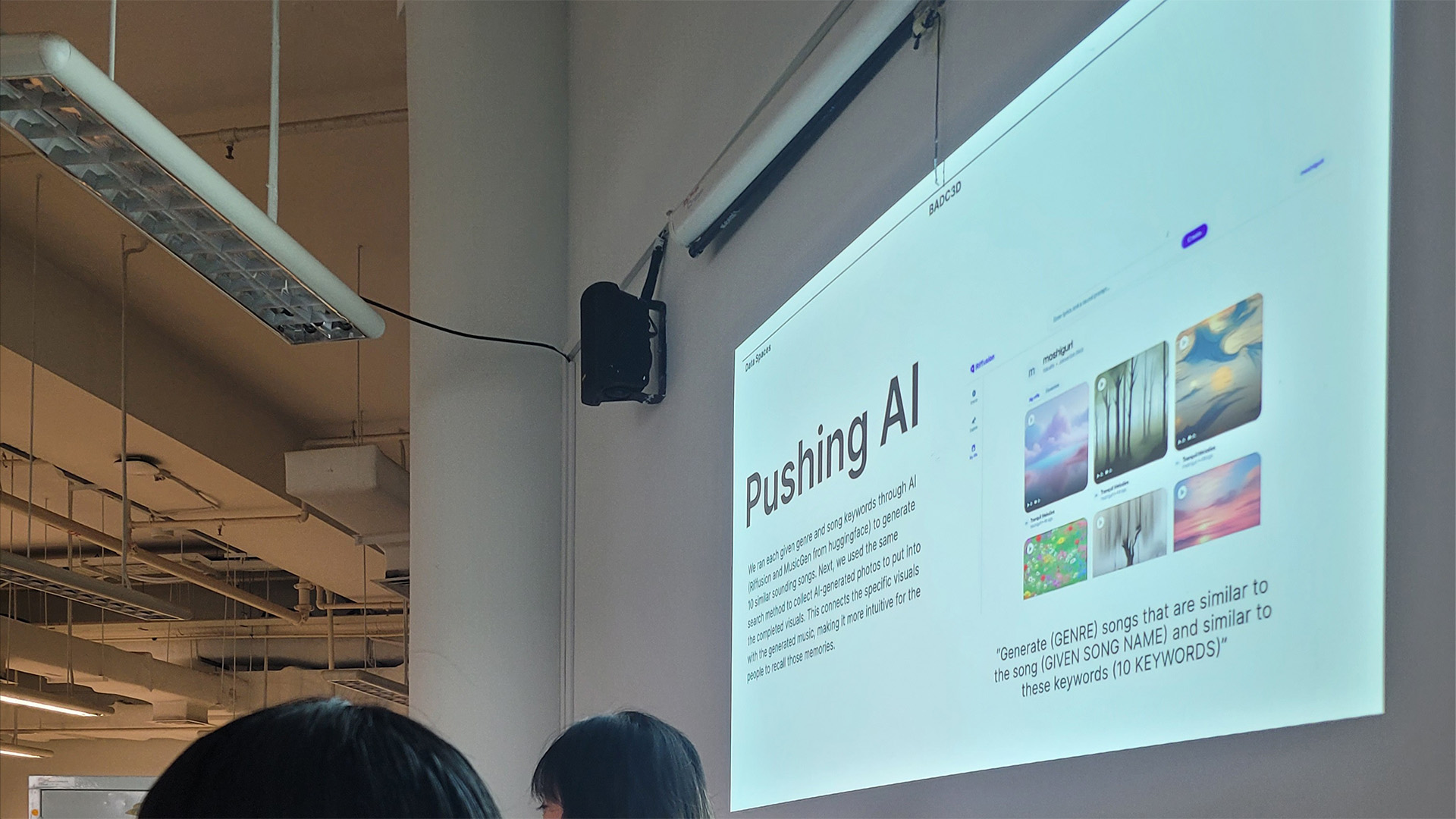

Using the collected data, we ran each given genre and set of song keywords through AI (Riffusion and MusicGen from Hugging Face) to generate 10 similar-sounding songs. Next, we used the same method to collect AI-generated photos to put into the completed visuals. This connects the specific visuals with the generated music, making it more intuitive for people to recall those memories.
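As a minimal sketch of how a genre and a set of song keywords might be turned into text prompts for a text-to-music model, the helper below builds 10 prompt variations from one form response. The function name `build_music_prompts`, the prompt wording, the keyword-rotation scheme and the sample keywords are all assumptions for illustration; we did not record our exact prompts here, and no model is actually called.

```python
from typing import List


def build_music_prompts(genre: str, keywords: List[str], count: int = 10,
                        per_prompt: int = 3) -> List[str]:
    """Build `count` text prompts, each pairing the genre with a rotating
    window of `per_prompt` keywords (hypothetical scheme for illustration)."""
    cleaned = [k.strip().lower() for k in keywords if k.strip()]
    if not cleaned:
        raise ValueError("at least one keyword is required")
    prompts = []
    for i in range(count):
        # Rotate through the keyword list so each prompt differs slightly.
        window = [cleaned[(i + j) % len(cleaned)] for j in range(per_prompt)]
        prompts.append(f"{genre.strip().lower()} song, {', '.join(window)}")
    return prompts


# Made-up example response: one genre and 10 song keywords.
prompts = build_music_prompts(
    "Ballad",
    ["warm", "nostalgic", "gentle", "piano", "rainy",
     "slow", "tender", "home", "evening", "longing"],
)
```

Each resulting string could then be pasted into, or passed to, whichever music generator is being used, giving one generated track per prompt.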

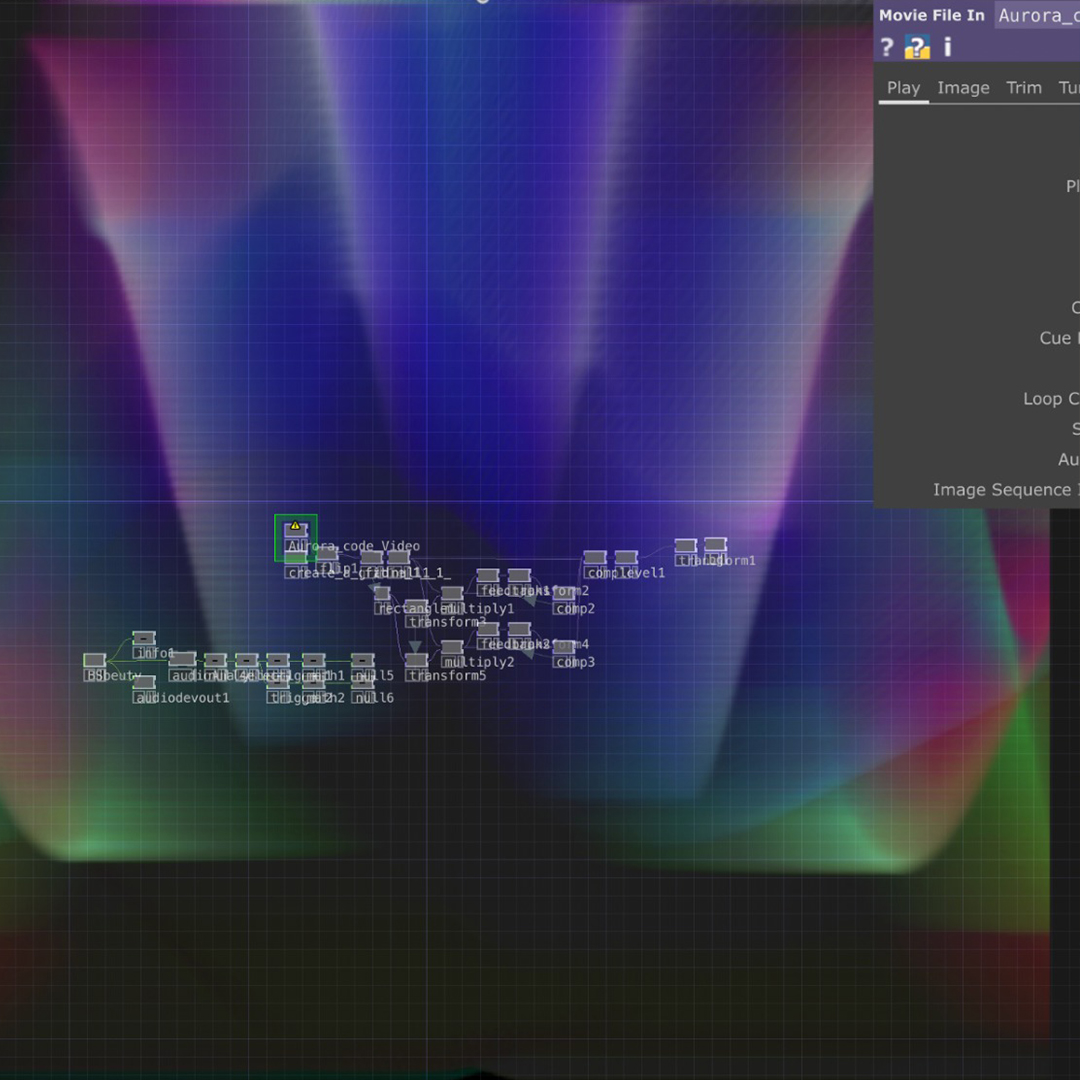

We ran the 10 memory keywords through AI (Imagine, Gencraft and Wepik) to generate pictures of each respondent's memory. Afterwards, we fed the pictures into TouchDesigner and created distorted visuals with slit scanning. The distorted slit scanning visually represents the abstract emotions associated with the music and the act of reminiscing.

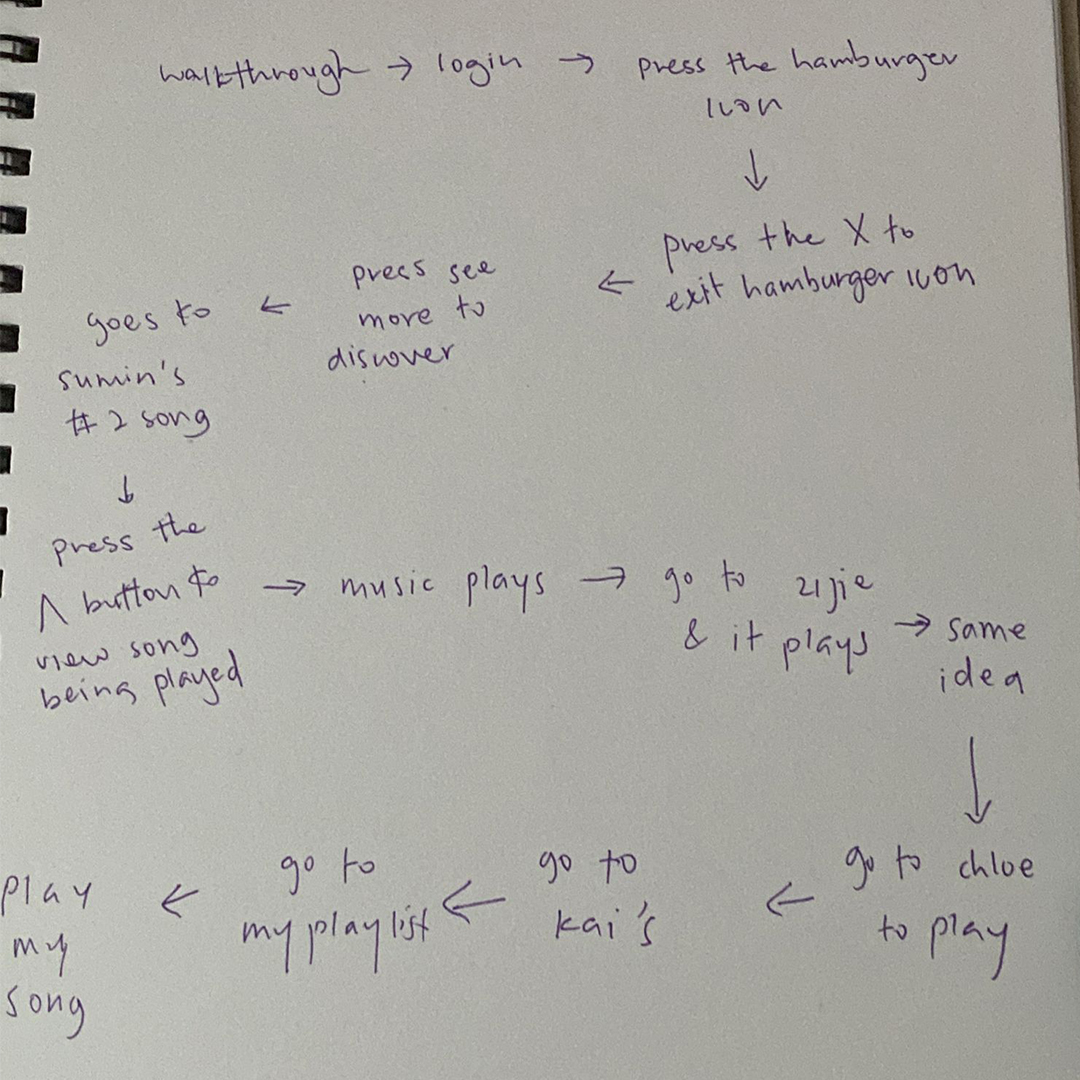

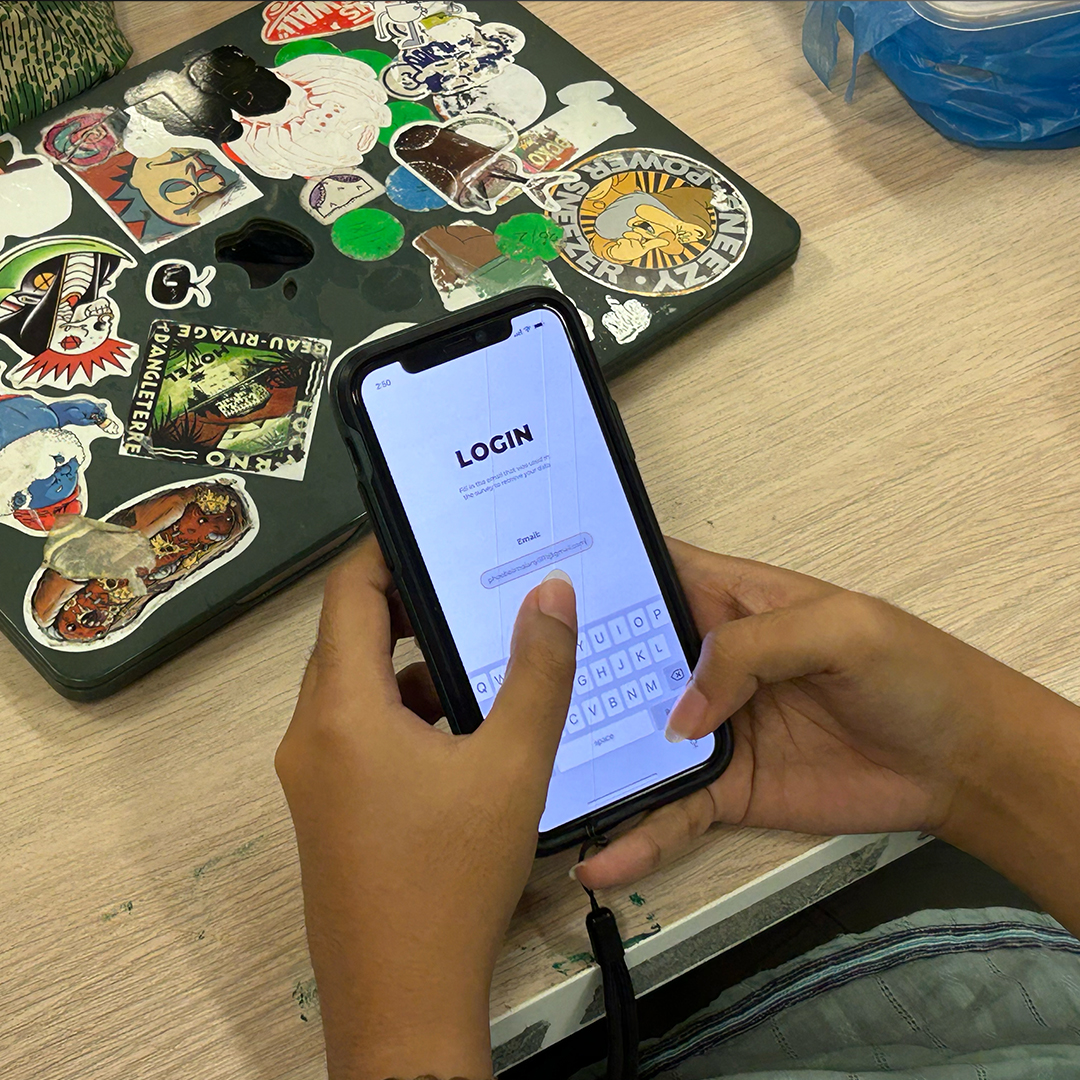

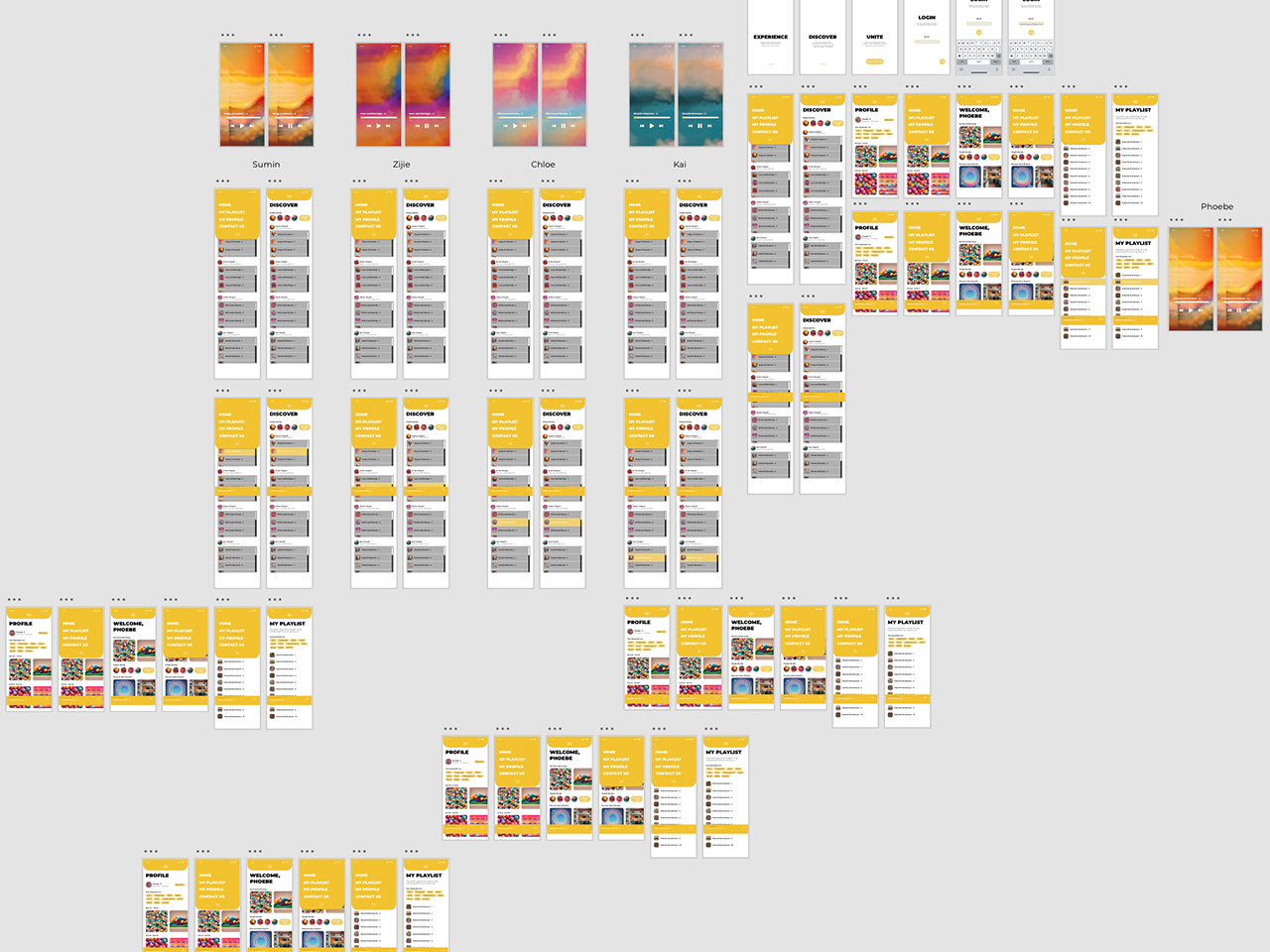
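The slit-scanning idea behind these visuals can be sketched outside TouchDesigner as well. The example below is a simplified, dependency-free illustration in plain Python and not our actual node network: it assumes the pictures are treated as a sequence of grayscale frames, and the names `Frame` and `slit_scan` are hypothetical. Each column of the output is taken from a different frame, so time is smeared across the width of the image.

```python
from typing import List

Frame = List[List[int]]  # one grayscale frame: rows of pixel values


def slit_scan(frames: List[Frame]) -> Frame:
    """Time-displacement slit scan: output column x is copied from the frame
    whose index is proportional to x, so time is spread across the width."""
    if not frames:
        raise ValueError("need at least one frame")
    height, width = len(frames[0]), len(frames[0][0])
    out = [[0] * width for _ in range(height)]
    for x in range(width):
        # Map the column position to a frame index in [0, len(frames) - 1].
        t = x * len(frames) // width
        for y in range(height):
            out[y][x] = frames[t][y][x]
    return out


# Tiny demo: 4 frames of 2x4 pixels, where frame t is filled with the value t.
frames = [[[t] * 4 for _ in range(2)] for t in range(4)]
result = slit_scan(frames)
```

In the demo, the leftmost column comes from the first frame and the rightmost from the last, which is the same left-to-right drift in time that produces the stretched, distorted look in the visuals.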

Lastly, we created an application named Melodic Memories. Users log in to the application with the email they submitted during our data collection. Once inside the app, they can access their curated playlists, which would ideally refresh and grow every few weeks. While they listen to the songs, the unique visuals play on their screens. Users can also discover the playlists of other people nearby, experiencing their visuals and learning a little about the person behind the memories.

Data collected

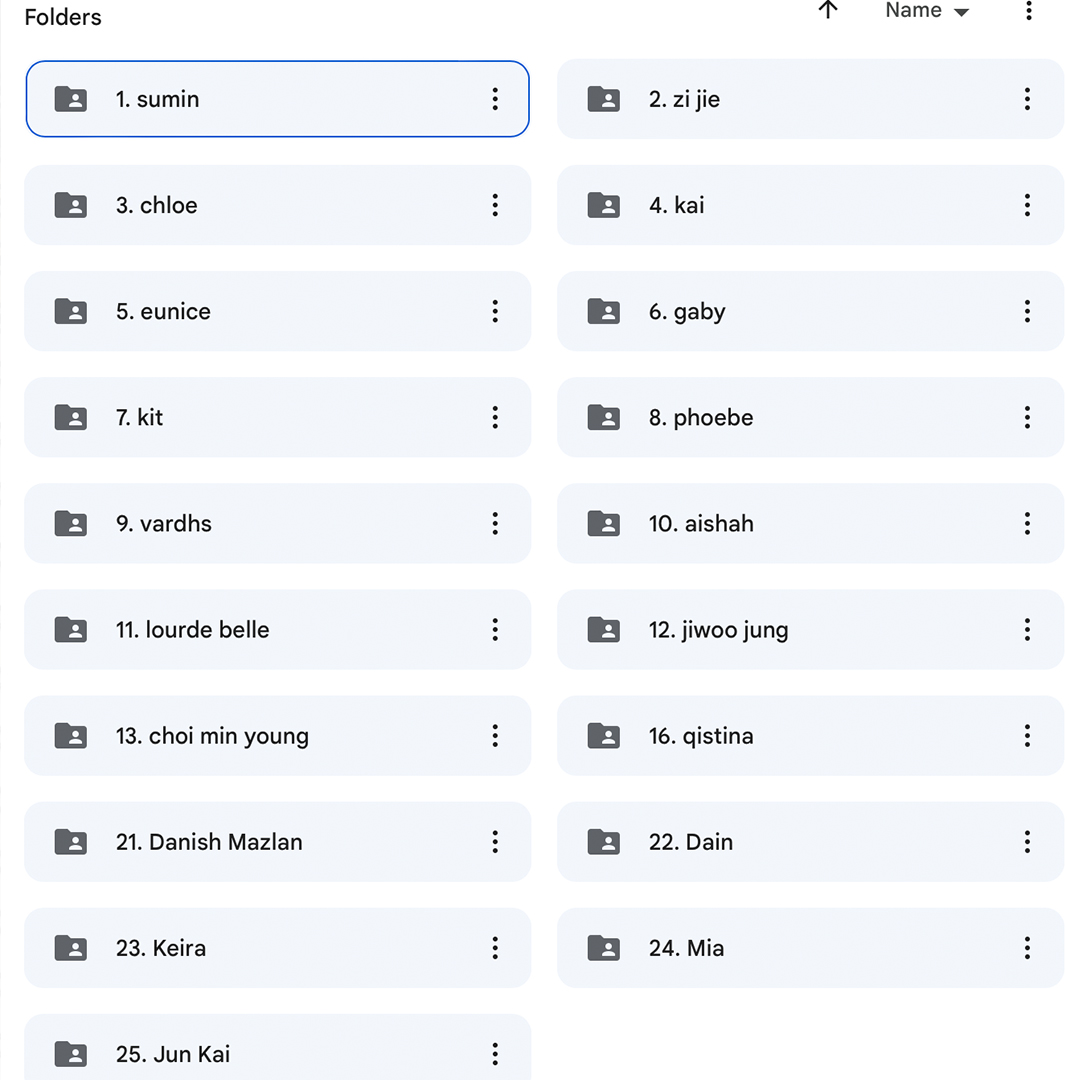

We collected data through a Google Form, which also recorded each respondent's email. We asked people for a song that evoked memories of their loved ones, together with the genre of the song and 10 keywords that represent it.

We also asked them for another 10 keywords that describe their memory of their loved ones. Collecting emails was necessary because the email is how respondents log in to our application. Our final dataset contains over 20 responses.

This is the link to the spreadsheet that contains all the data collected. (click to view our data spreadsheet)

Artefact

Accessible through the email provided during data collection, Melodic Memories is a user-friendly application. Upon logging in, users gain access to their personally curated playlists, which are intended to be updated and expanded periodically. As users enjoy their carefully curated tunes, distinctive visuals accompany the music on their screens. Additionally, the app allows users to explore playlists from individuals nearby, offering a glimpse into other people's musical experiences and a brief introduction to the memories they hold dear.

At the start, we decided to use Figma to create the application because of its user-friendly and collaborative features, but along the way we realised we could not figure out how to include the video and playback aspects. As such, we ended up using Adobe XD to finish the application.

"Learning never exhausts the mind."

- Da Vinci

Conclusion

Our approach to expressing the theme of yearning for a loved one was straightforward. We opted for an intuitive method, translating our message practically through the mediums of video, music, and an app. In doing so, we aimed to showcase that AI could seamlessly integrate into our daily lives, offering assistance in a warm and human-centric manner rather than a cold and detached one.

Despite the overall success of our AI-driven work, there were some noticeable challenges. For instance, we had difficulty getting the AI-generated music to work with TouchDesigner's audio analyzer. Additionally, as non-professionals in app development, we faced issues such as the playback bar falling out of sync while the music played.

To address these challenges, it became apparent that enhancing the quality of AI-generated content or employing a multi-step approach to AI music creation could be effective solutions. Exploring various mobile app programs beyond Figma and Adobe XD also emerged as a promising avenue for refining our app's performance.

Click here to check out the rest of the AI-generated music and visuals.